Can AI See Safe Streets?

AI is getting good at spotting what makes a street feel safe — from greenery to lighting.

We often talk about how a street feels: safe, busy, or perhaps slightly rough. But can an AI see those same qualities?

Recent research is starting to suggest it can. A study led by Vania Ceccato and colleagues at KTH and MIT used street-view images of Stockholm to teach an AI model to predict how safe different areas look to humans. The results show that visual features like trees, building density, open sky, and visible roads are strong predictors of perceived safety, in both directions: streets with greenery and active façades tend to feel safer, while those dominated by roads and industry feel less so.

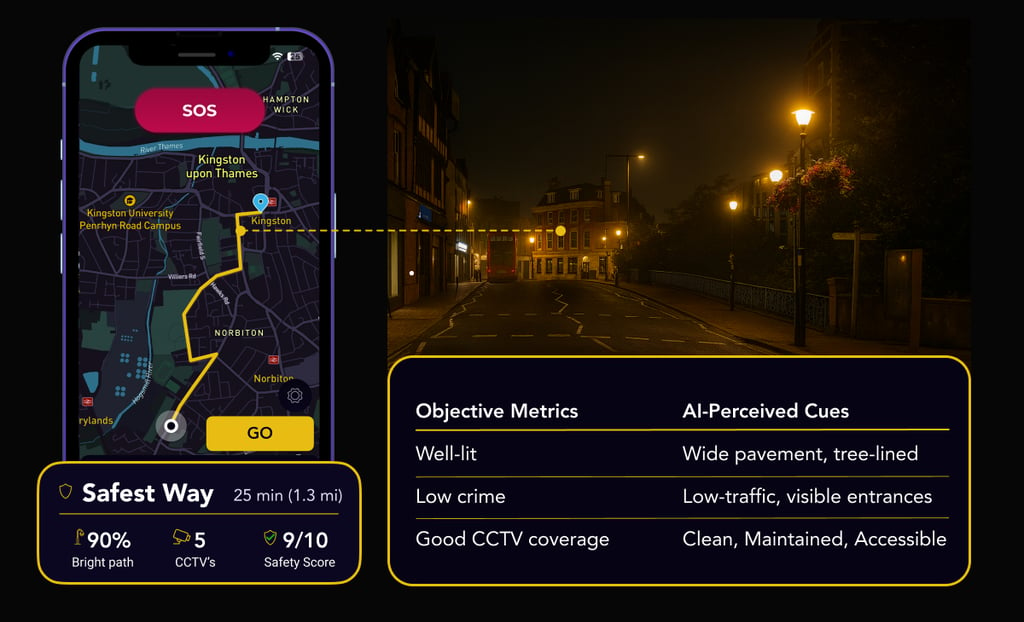

At Safest Way, we’ve been exploring similar questions through our own study, *Can CLIP See Safe Streets?* Using OpenAI’s CLIP, a vision-language model trained on text–image pairs, we tested whether it can recognise the same visual cues humans associate with safety in London’s streetscapes. Without any retraining, CLIP’s zero-shot classifications of “safe” vs “unsafe” streets aligned with human judgement in 94% of cases. That’s a remarkable sign that modern AI already encodes some of the visual “grammar” of urban safety: lighting, openness, and order.
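For readers curious about the mechanics, here is a minimal sketch of how zero-shot classification and the agreement check can work in principle. It is an illustration under stated assumptions, not the code from our study. The embeddings are made-up three-number vectors standing in for what CLIP's image and text encoders would produce. The label names, the helper function names, and the `logit_scale` value of 100 are all hypothetical choices for this example. The idea is that each street image is compared with one text prompt per label, the closest prompt wins, and the predictions are then compared with human labels.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_probs(image_emb, prompt_embs, logit_scale=100.0):
    """Softmax over scaled image-to-prompt similarities.

    logit_scale is an assumed temperature for this sketch.
    """
    logits = [logit_scale * cosine(image_emb, p) for p in prompt_embs]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - top) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(image_emb, prompt_embs, labels):
    """Return the label whose prompt is most similar to the image."""
    probs = zero_shot_probs(image_emb, prompt_embs)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best]

def agreement_rate(predicted, human):
    """Share of images where the model label matches the human label."""
    if not predicted or len(predicted) != len(human):
        raise ValueError("need two non-empty lists of equal length")
    matches = sum(p == h for p, h in zip(predicted, human))
    return matches / len(predicted)

# Toy stand-ins (hypothetical): in practice these vectors would come from
# CLIP's text encoder (one prompt per label) and image encoder (one per photo).
LABELS = ["safe", "unsafe"]
PROMPT_EMBS = [[1.0, 0.0, 0.2], [0.0, 1.0, 0.2]]
IMAGE_EMBS = [
    [0.9, 0.1, 0.3],
    [0.2, 0.8, 0.1],
    [0.7, 0.3, 0.2],
    [0.6, 0.5, 0.0],
]
HUMAN_LABELS = ["safe", "unsafe", "safe", "unsafe"]

predictions = [classify(emb, PROMPT_EMBS, LABELS) for emb in IMAGE_EMBS]
rate = agreement_rate(predictions, HUMAN_LABELS)
print(predictions)
print(f"agreement: {rate:.0%}")
```

On this toy data the model disagrees with the human label on the last image, so the agreement rate comes out at 75%. "Zero-shot" here means that nothing is trained or fine-tuned: the labels only enter through the text prompts.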

Of course, perception isn’t the same as reality. AI can tell us which places look safe, but not necessarily which ones are. Still, this line of research opens powerful opportunities. By combining perception-based AI models with real crime and infrastructure data, cities could gain new and scalable ways to identify where design improvements, like better lighting or cleaner and greener streets, might make the biggest difference.

In short: we’re teaching machines to see cities a bit more like people do. The hope is that by learning what safety looks like, we can help create places where safety also feels real.